August 26, 2020

Cloud Storage Best Practices for Enterprise Development

Whether you’re looking for cost savings or higher storage capacity, cloud storage has solutions for any enterprise. Even with its advantages, cloud storage requires a different approach than configuring internal network storage. Misconfigurations could leave your organization’s data open to attackers, and disorganized management could lead to unnecessary costs. Following these best practices helps avoid most of the pitfalls an organization can experience when integrating cloud storage into applications, infrastructure, and failover strategies.

Why Use Cloud Storage?

Before you create strategies around storage, the first step is to determine the use case specific to your organization. Every organization has its own goals for storage, but some common reasons for cloud storage implementation include:

- Software as a Service (SaaS): Software that runs in the cloud can store and retrieve data from cloud storage more efficiently and conveniently to support global customers.

- Large data collection for analysis: Big data analytics relies on large amounts of collected unstructured data that could reach petabytes of storage capacity. Cloud storage saves on costs and scales automatically as needed.

- SD-WAN infrastructure: Companies with several geographic locations can speed up performance on cloud applications using a software-defined wide-area network (SD-WAN) that integrates cloud storage into the infrastructure.

- Scaled local storage: Adding network drives to internal storage is expensive. Cloud storage can be added to internal infrastructure within minutes and often costs a fraction of the price of local drives.

- New development: Developers can take advantage of cloud storage without provisioning expensive infrastructure locally. When a project is ready to be deployed, it’s easy to deploy on existing cloud infrastructure.

- Virtual desktops (VDI): VDI environments must scale as users are added to the network. Cloud storage lets businesses scale their user environments using VDI.

- Email storage and archiving: Email communications must be stored for compliance and auditing. Accumulated messages and attachments require extensive storage space that cloud storage can manage.

- Disaster recovery: Cloud storage can be used for backups or failover should local storage fail. Using cloud storage for disaster recovery can significantly reduce downtime.

- Backups: Cloud storage is probably best used for backups. Combined with disaster recovery, cloud backups offer businesses a way to have complete backups stored off-site in case the office suffers from a natural disaster where internal hardware is destroyed.

Strategies and Best Practices

After you determine your use case, you can analyze strategies for your cloud storage configurations. Many of the strategies and best practices revolve around cybersecurity and configurations, but others determine the way you should manage your cloud storage and organize files. Not every strategy is necessary, but the following best practices will help administrators get started provisioning, configuring, and managing cloud storage.

Consider at Least Two Providers
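
A failover arrangement between two providers can be pictured as an ordered list of sources that the application tries in turn. The following is a minimal sketch, not a production design: read_with_failover, AllProvidersFailed, and the two fetch_from_* functions are hypothetical names, and the fetch functions are stand-ins for whatever SDK calls each provider actually requires.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every configured storage provider fails to return the object."""


def read_with_failover(
    key: str,
    providers: Sequence[Tuple[str, Callable[[str], bytes]]],
) -> Tuple[str, bytes]:
    """Try each provider in order; return (provider_name, data) from the first success."""
    errors: List[str] = []
    for name, fetch in providers:
        try:
            return name, fetch(key)
        except Exception as exc:  # a real client would catch narrower, SDK-specific errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for real provider SDK calls.
def fetch_from_primary(key: str) -> bytes:
    raise ConnectionError("primary region unavailable")


def fetch_from_secondary(key: str) -> bytes:
    return b"contents of " + key.encode()


provider_used, data = read_with_failover(
    "reports/2020-08.csv",
    [("primary", fetch_from_primary), ("secondary", fetch_from_secondary)],
)
print(provider_used, data)
```

A pattern like this only works if the data has already been replicated to the secondary provider, which is where much of the extra cost of a second provider comes from.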

If your goal is to store application data, it might be worth investing in at least two providers. Most cloud providers offer extensive uptime guarantees, but relying on one cloud provider leaves the corporation open to a single point of failure. In 2017, a human error caused an outage on Amazon Web Services (AWS) storage in its US-EAST-1 region. It’s rare for AWS to fail, but it is a possibility. If your application relies on only one provider, an outage could mean downtime for the application until the cloud provider recovers. A second provider can also be configured as a failover resource. Should the primary cloud provider fail, a secondary service provider can take over. For instance, Microsoft Azure or Google Cloud Platform (GCP) could be used as failover for AWS. Note that this would add considerable costs to the enterprise, but it could also save thousands of dollars that would otherwise be lost to cloud provider downtime.

Review Compliance Regulations

Several compliance regulations set cybersecurity standards for the way organizations manage customer data. Any personally identifiable information (PII) should be stored in encrypted form and monitored and audited for unauthorized access. The European Union’s General Data Protection Regulation (GDPR) requires that businesses allow customers to request deletion of their data. The Payment Card Industry Data Security Standard (PCI DSS) governs how merchants store, process, and transmit payment card data. Review any regulatory standards under which the business could be fined for poor cloud storage management. When choosing a cloud provider, check that it publishes a System and Organization Controls (SOC) 3 report. A SOC 3 report is a general-use report, intended for public distribution, that summarizes how the provider’s security and infrastructure controls are managed. The provider’s data centers should also be Tier 3. Tier 3 data centers are designed for 99.982% availability, which leaves 0.018% of the 8,760 hours in a year, or only about 1.6 hours of downtime per year.

Keep Strict Access Control Policies
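
Least privilege is easiest to reason about as deny by default: a request is refused unless a grant explicitly covers it. The sketch below illustrates that idea only. The ROLE_GRANTS table, its role names, and the path prefixes are invented for the example, and real deployments would express the same rules in the provider's own policy tooling rather than in application code.

```python
from typing import Dict, FrozenSet

# Hypothetical role-to-permission map; roles and prefixes are illustrative only.
ROLE_GRANTS: Dict[str, Dict[str, FrozenSet[str]]] = {
    "analyst": {"reports/": frozenset({"read"})},
    "finance": {
        "reports/": frozenset({"read", "write"}),
        "invoices/": frozenset({"read", "write"}),
    },
}


def is_allowed(role: str, action: str, path: str) -> bool:
    """Deny by default; allow only when the role holds the action on a matching prefix."""
    grants = ROLE_GRANTS.get(role, {})
    return any(
        path.startswith(prefix) and action in actions
        for prefix, actions in grants.items()
    )


print(is_allowed("analyst", "read", "reports/q2.csv"))     # True
print(is_allowed("analyst", "write", "reports/q2.csv"))    # False: read-only role
print(is_allowed("analyst", "read", "invoices/0042.pdf"))  # False: no grant on this prefix
print(is_allowed("intern", "read", "reports/q2.csv"))      # False: unknown role
```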

Even large, well-known organizations make the mistake of leaving cloud storage publicly accessible, which leads to large data breaches. You don’t need to be a hacker to find open AWS buckets. Online scanning tools let anyone find publicly exposed data. Ensuring that cloud storage isn’t open to the public is the first step, but it isn’t the only access control policy needed. Folders and files stored in the cloud should have the same strict access controls as your internal data. Cloud providers offer account access and management tools, and many of them integrate with internal services such as Active Directory. Base permissions on the principle of least privilege, which says that users should only have access to the files necessary to perform their job functions. This principle helps reduce the chance of privilege escalation and stops attackers from traversing the network freely on a high-privilege account.

Use Cryptographically Strong Algorithms for Encryption
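
Algorithm choice depends on the job. A fast hash such as SHA-3 suits integrity checks, while stored passwords are generally better served by a salted, deliberately slow key-derivation function. The sketch below shows both using only Python's standard library, which has no AES implementation, so encryption itself is out of scope here. The function names and the iteration count are assumptions for illustration, not recommendations.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

PBKDF2_ITERATIONS = 200_000  # assumed work factor; tune for your hardware and policy


def file_digest(data: bytes) -> str:
    """SHA3-256 digest, suitable for checking that stored data has not changed."""
    return hashlib.sha3_256(data).hexdigest()


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Derive a password hash with a fresh random salt; store both values."""
    salt = secrets.token_bytes(16)
    derived = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return salt, derived


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return hmac.compare_digest(candidate, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))
print(verify_password("wrong guess", salt, stored))
print(len(file_digest(b"quarterly report")))
```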

Whether it’s for your own standards or for compliance, always encrypt stored sensitive information and PII. Weak or outdated algorithms leave the data open to brute-force attacks, so it’s just as important to use the right algorithms. Encryption adds some performance overhead, so take performance into consideration. The Advanced Encryption Standard (AES) with 128-bit keys is a cryptographically secure symmetric algorithm often used in data storage. AES with 256-bit keys is also available for a higher level of protection at a modest performance cost. For one-way hashing, such as integrity checks, the Secure Hash Algorithm (SHA) 3 standard is available. Stored passwords need more than a fast hash: use a salted, deliberately slow password-hashing function such as PBKDF2, bcrypt, scrypt, or Argon2.

Organize Data and Archive Unused Files

Organizing folders and files helps administrators determine whether they should be backed up, whether they contain sensitive information, and whether they can be archived. Archived data is moved out of its original storage location but kept so that it can be audited should the organization need to review it in the future. Archives can be compressed when stored, so archiving unused data is useful for cost savings. A clear folder structure also makes it easier to determine access controls across large folder trees. Organized folders make every aspect of storage management easier for administrators, so a policy on the way folders should be set up will improve cost savings, backup strategies, and archive management.

Set Up a Retention Policy
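
Conceptually, a retention policy turns a delete into a move to a holding area with a timestamp, and a separate purge step removes anything held past the window. The following in-memory sketch shows that behavior under stated assumptions: SoftDeleteStore and the 30-day RETENTION window are hypothetical, and cloud providers implement the equivalent through their own retention or soft-delete settings.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Tuple

RETENTION = timedelta(days=30)  # assumed retention window


class SoftDeleteStore:
    """Toy object store whose deletes are recoverable until the window expires."""

    def __init__(self) -> None:
        self._live: Dict[str, bytes] = {}
        self._trash: Dict[str, Tuple[bytes, datetime]] = {}

    def put(self, key: str, data: bytes) -> None:
        self._live[key] = data

    def delete(self, key: str, now: datetime) -> None:
        # Move the object to the holding area instead of destroying it.
        self._trash[key] = (self._live.pop(key), now)

    def restore(self, key: str, now: datetime) -> bool:
        entry = self._trash.get(key)
        if entry is None or now - entry[1] > RETENTION:
            return False
        self._live[key] = entry[0]
        del self._trash[key]
        return True

    def purge_expired(self, now: datetime) -> int:
        expired = [k for k, (_, at) in self._trash.items() if now - at > RETENTION]
        for k in expired:
            del self._trash[k]
        return len(expired)


store = SoftDeleteStore()
deleted_at = datetime(2020, 8, 1, tzinfo=timezone.utc)
store.put("drafts/plan.docx", b"v1")
store.put("drafts/old.docx", b"v0")
store.delete("drafts/plan.docx", deleted_at)
store.delete("drafts/old.docx", deleted_at)

restored = store.restore("drafts/plan.docx", deleted_at + timedelta(days=10))
purged = store.purge_expired(deleted_at + timedelta(days=31))
print(restored, purged)  # True 1
```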

Retention policies are common for backups, but cloud providers also offer retention policies in case users accidentally delete data. Instead of permanently deleting data, a retention policy on cloud storage will hold it for retrieval and recovery for a set amount of time before permanently deleting it. This strategy saves administrators time because they do not need to recover data from backup files.

Use the Access-Control-Allow-Origin Header for Strict Access Controls from Web Requests
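
Restricting the Access-Control-Allow-Origin header comes down to an exact comparison between the requesting origin and the origins you trust. The sketch below illustrates that matching logic only. Cloud providers normally apply it for you through bucket-level CORS settings, and the function name cors_headers and the ALLOWED_ORIGINS set are assumptions made for the example.

```python
from typing import Dict, Optional

# Origins trusted to read responses; exact scheme and host must match.
ALLOWED_ORIGINS = frozenset({"https://yourdomain.com"})


def cors_headers(request_origin: Optional[str]) -> Dict[str, str]:
    """Return CORS response headers for an allowed origin, or none at all otherwise."""
    if request_origin in ALLOWED_ORIGINS:
        return {
            "Access-Control-Allow-Origin": request_origin,
            # Tell caches that the response varies with the Origin request header.
            "Vary": "Origin",
        }
    # No header is sent, so the browser refuses to expose the response to the script.
    return {}


print(cors_headers("https://yourdomain.com"))
print(cors_headers("https://attacker.example"))
print(cors_headers("http://yourdomain.com"))  # different scheme, so not allowed
```

Echoing back a single matched origin, rather than sending the asterisk wildcard, keeps the allowlist explicit and makes it easy to audit.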

Cross-Origin Resource Sharing (CORS) is a browser security mechanism that lets a server declare which external origins may read its resources. Without it, the browser’s same-origin policy blocks scripts from reading cross-origin responses. If your web application reads data from cloud storage in the browser, the storage service must allow access to it using the Access-Control-Allow-Origin header. Some developers use the asterisk (‘*’) in the Access-Control-Allow-Origin header value, which tells the browser that any site may read from the bucket. This permissive misconfiguration leaves bucket data readable from any attacker-controlled site.

For example, it’s not uncommon for developers to use the XMLHttpRequest object to retrieve external data in JavaScript. For requests that are not considered simple (for example, those with custom headers or with methods other than GET, HEAD, and POST), the browser first sends a preflight request to determine if the application has permission. If the requesting origin matches the Access-Control-Allow-Origin header, the script can read the response. Otherwise, the browser’s CORS restrictions reject it.

To use a domain example, suppose your application at yourdomain.com makes a request to an AWS bucket. Your AWS bucket should be configured to allow only yourdomain.com applications to retrieve data. AWS, GCP, and Azure have these controls available to developers. The following Access-Control-Allow-Origin header would be the proper way to allow your application and disallow any others:

Access-Control-Allow-Origin: https://yourdomain.com

Should an attacker send a phishing message that lures users to a malicious site, scripts on that site would be unable to read the bucket’s responses because of the above header configuration. Note that CORS controls only which origins can read responses in a browser. It is not a complete defense against Cross-Site Request Forgery (CSRF), and it does not replace access controls on the bucket itself.

Configure Monitoring Across All Storage
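
Most monitoring questions reduce to simple queries over access records and usage figures. The sketch below is only an illustration of that idea: the record format, the sample events, and the thresholds are invented, and real providers expose richer, provider-specific logs and alerting tools.

```python
from collections import Counter
from typing import Dict, Iterable, List

# Hypothetical access-log records.
events = [
    {"user": "alice", "action": "read", "key": "reports/q2.csv"},
    {"user": "bob", "action": "delete", "key": "reports/q1.csv"},
    {"user": "mallory", "action": "read", "key": "hr/salaries.csv"},
    {"user": "mallory", "action": "read", "key": "hr/reviews.csv"},
    {"user": "mallory", "action": "read", "key": "hr/contracts.csv"},
]


def deleted_keys(records: Iterable[Dict[str, str]]) -> List[str]:
    """List objects that were deleted, so accidental deletions can be reviewed."""
    return [r["key"] for r in records if r["action"] == "delete"]


def heavy_readers(records: Iterable[Dict[str, str]], threshold: int) -> Dict[str, int]:
    """Flag users whose read count meets or exceeds the threshold."""
    counts = Counter(r["user"] for r in records if r["action"] == "read")
    return {user: n for user, n in counts.items() if n >= threshold}


def capacity_alert(used_bytes: int, quota_bytes: int, warn_ratio: float = 0.8) -> bool:
    """Return True when usage has reached the warning share of the quota."""
    return used_bytes / quota_bytes >= warn_ratio


print(deleted_keys(events))                # ['reports/q1.csv']
print(heavy_readers(events, threshold=3))  # {'mallory': 3}
print(capacity_alert(850, 1000))           # True
```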

Monitoring is not only a part of compliance requirements, but it also keeps administrators informed about file access activity. Every major cloud provider offers monitoring controls, and they can be beneficial when attackers compromise infrastructure. Timely alerts can reduce damage from an ongoing attack or stop an attacker from continuing vulnerability scans in search of exploit opportunities. Organizations can use monitoring tools for more than just cybersecurity. Monitoring can tell administrators if data was accidentally deleted, help identify a failure, audit file access, and show current storage usage and whether capacity needs to be increased.

Conclusion

Cloud storage has several benefits for organizations, but the way it’s managed and configured plays a big role in its successful implementation. It saves on IT costs, but it also can cost organizations millions of dollars should the infrastructure be misconfigured. Before implementing cloud storage in your software deployment or backup strategy, take the time to prepare access policies, organization standards, and a monitoring setup.Related Articles

Comprehensive AI Security Strategies for Modern Enterprises

Over the past few years, AI has gone from a nice-to-have to a must-have across enterprise operations. From automated customer service to predictive analytics, AI technologies now handle sensitive data like never before. A Kiteworks report shows that over 80% of enterprises now use AI systems that access their most critical business information. This adoption…Learn More

Building Trust in AI Agents Through Greater Explainability

We’re watching companies leap from simple automation to an entirely new economy driven by self-governing AI agents. According to Gartner, by 2028 nearly a third of business software will have agentic AI built in, and these agents will be making at least 15% of everyday work decisions on their own. While that can significantly streamline…Learn More

Machine Learning for Fraud Detection: Evolving Strategies for a Digital World

Digital banking and e-commerce have changed how we transact, creating new opportunities for criminals. Businesses lose an estimated $5 trillion to fraud each year. The sheer number of fast-paced digital transactions is too much for older fraud detection methods. These traditional tools are often too slow and inflexible to stop today's automated threats. This new…Learn More

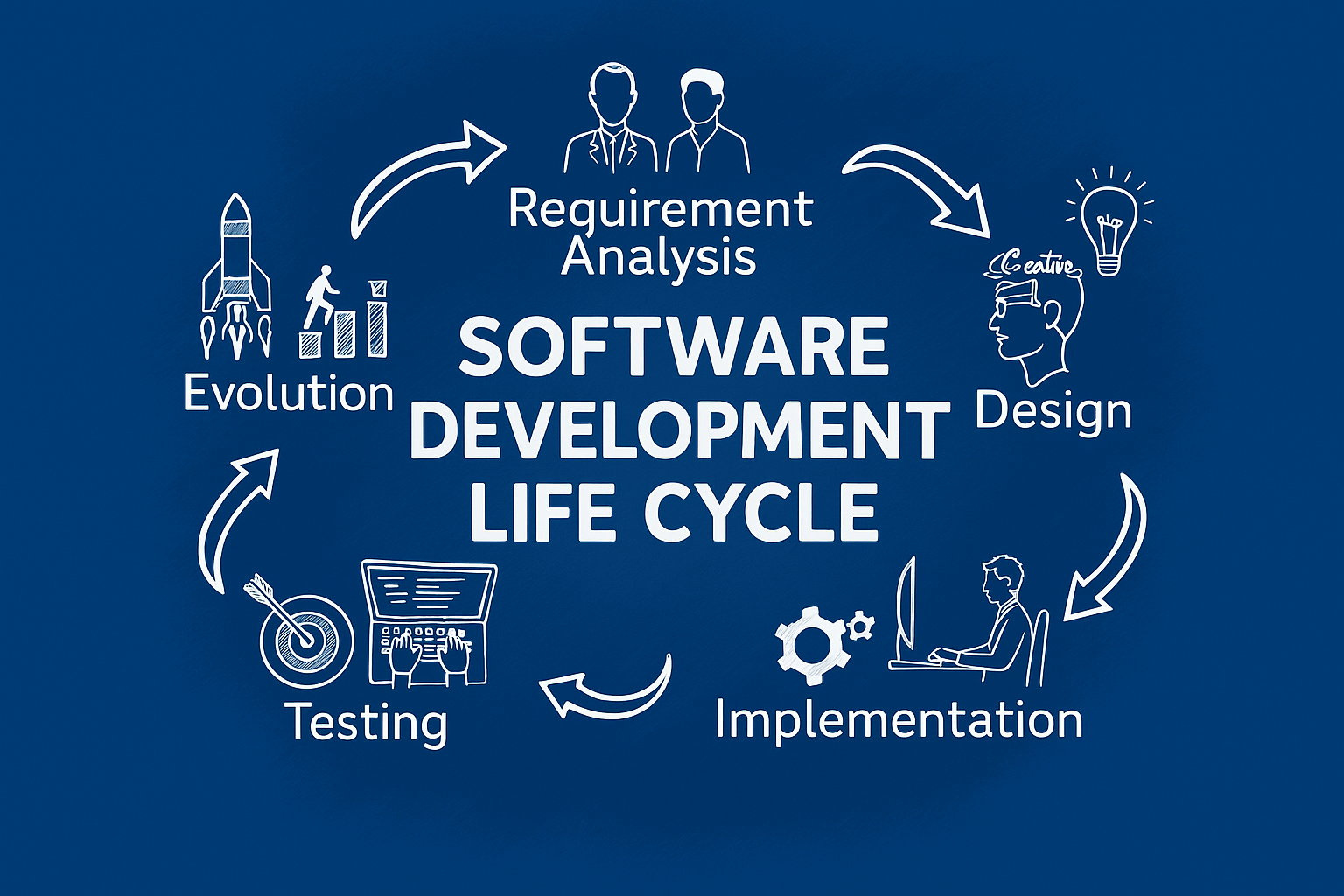

Software Development Life Cycle (SDLC): Helping You Understand Simply and Completely

Software development is a complex and challenging process, requiring more than just writing code. It requires careful planning, problem solving, collaboration across different teams and stakeholders throughout the period of development. Any small error can impact the entire project, but Software Development Life Cycle (SDLC) provides the much needed support to overcome the complexities of…Learn More