Addressing Key Scalability Challenges in Microservices Architecture

Microservices architecture is an approach to application development in which an application is divided into small, independent components called services. Each service performs a specific function and runs as a separate process.

As the application and its services grow, there is an increasing need for scaling. At this stage, developers start facing various challenges. These challenges may be related to managing and supporting the entire infrastructure, configuring the interaction of microservices, monitoring, or other important aspects of the scaling process.

In this article, we will define the key characteristics of microservices, examine the challenges that arise when scaling them, and provide best practices for addressing these challenges.

What are microservices?

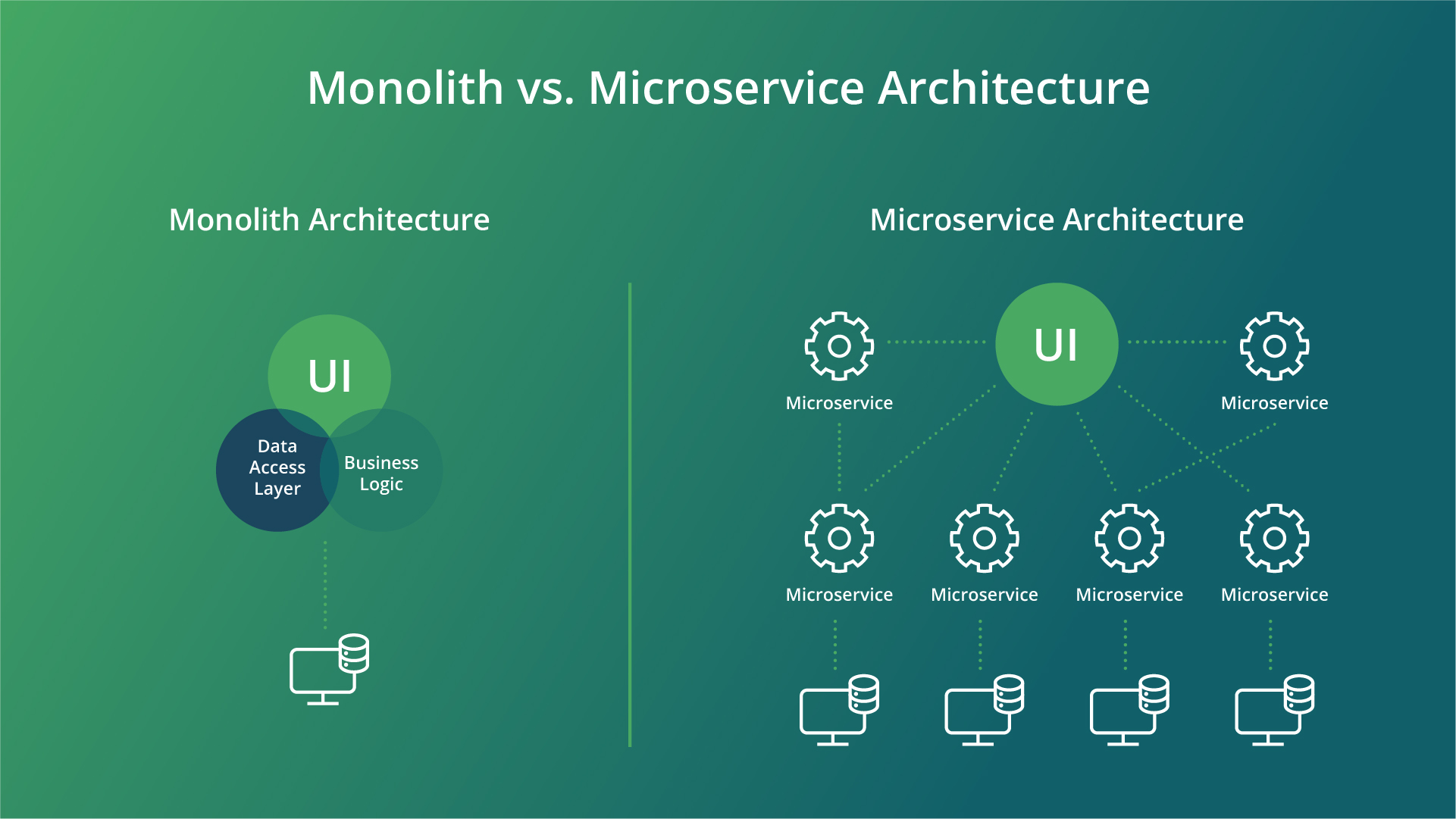

Microservices are an architectural pattern in software development in which an application consists of loosely coupled services that communicate with each other through lightweight protocols. The essence of microservices is best understood by contrasting them with a monolith, a single large application.

The diagram above contrasts monolithic and microservices architectures. To elaborate, let’s look at eight distinctive features of microservices.

Small size

Microservices are typically small and focused on specific functionalities or business capabilities.

Independence

Each microservice operates independently, allowing for separate development, deployment, and scaling.

Built around business needs and bounded contexts

Microservices are designed to closely align with specific business requirements and utilize bounded contexts to define their scope.

Interaction through smart endpoints and dumb pipes

Microservices communicate with each other over the network, following the principle of having smart endpoints capable of processing data and dumb pipes for simple data transmission.

Design for failure

Microservices embrace their distributed nature and are designed with fault tolerance and resilience in mind.

Minimized centralization

Microservices architecture reduces centralized components, promoting decentralization and autonomy.

Automation in development and maintenance

Automation plays a crucial role in the continuous integration, deployment, and management of microservices.

Iterative evolution

Microservices architecture facilitates iterative development and evolution, allowing for incremental changes and updates to individual services.

Microservices scalability: the key challenge

Scaling a monolithic application is relatively simple: you just add more resources as the volume of transactions grows. A load balancer helps distribute the load across multiple identical servers, which typically host the entire application.

With a monolith, even if only one part of the application needs more capacity, you typically have to scale the entire application by deploying additional full instances and distributing the load evenly across them. With a microservices architecture, by contrast, each service can be scaled independently. This flexibility comes at a price: scaling a microservices-based application is more complex and requires a different approach than scaling a monolith.

A microservices-based application comprises various services and components. To scale it, you can either scale the entire application simultaneously or identify and scale specific components and services individually.

How to address microservice scalability challenges: crucial steps

Below are best practices developers can follow to address these challenges when scaling microservice-based applications.

Step 1. Coordination

As the number of microservices grows, so does the complexity of managing them. Coordination within a microservices architecture is therefore crucial: it involves managing communication and interaction among individual components across a distributed system. Below are three aspects of service coordination that are essential for scalability.

- Service discovery

As applications comprise numerous microservices, each with dynamically changing IP addresses or URLs, ensuring efficient communication poses a challenge. Service discovery technology addresses this by automatically detecting and registering available microservices, simplifying interaction, and reducing manual configuration.

Common approaches to implementing service discovery include DNS-based, client-side, and server-side discovery. Notable tools implementing these mechanisms include etcd, Consul, Kubernetes, and Eureka.
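
To make client-side discovery concrete, here is a minimal in-memory sketch. The `ServiceRegistry` class and its methods are hypothetical and do not reflect the API of etcd, Consul, or Eureka; it only illustrates the core idea that instances register, refresh their entry with heartbeats, and drop out once a time-to-live (TTL) expires, so callers only see live addresses.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Instance:
    service: str
    address: str           # e.g. "10.0.0.5:8080"
    last_heartbeat: float  # registry clock time of the last registration or heartbeat


class ServiceRegistry:
    """Hypothetical in-memory registry; instances expire if they stop sending heartbeats."""

    def __init__(self, ttl_seconds: float = 10.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._instances: dict[tuple[str, str], Instance] = {}

    def register(self, service: str, address: str) -> None:
        self._instances[(service, address)] = Instance(service, address, self.clock())

    def heartbeat(self, service: str, address: str) -> None:
        # A heartbeat simply refreshes the registration timestamp.
        self.register(service, address)

    def deregister(self, service: str, address: str) -> None:
        self._instances.pop((service, address), None)

    def lookup(self, service: str) -> list[str]:
        """Return the addresses of live instances of `service`, pruning expired entries."""
        now = self.clock()
        for key, inst in list(self._instances.items()):
            if now - inst.last_heartbeat > self.ttl:
                del self._instances[key]
        return sorted(i.address for i in self._instances.values() if i.service == service)


if __name__ == "__main__":
    fake_now = [0.0]  # a controllable clock makes expiry easy to demonstrate
    registry = ServiceRegistry(ttl_seconds=10, clock=lambda: fake_now[0])
    registry.register("orders", "10.0.0.5:8080")
    registry.register("orders", "10.0.0.6:8080")
    fake_now[0] = 8.0
    registry.heartbeat("orders", "10.0.0.6:8080")  # only the second instance stays alive
    fake_now[0] = 12.0
    print(registry.lookup("orders"))  # ['10.0.0.6:8080']
```

A client would then pick one of the returned addresses. In server-side discovery, the same lookup happens inside a router or load balancer instead of the client.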

- Load balancing

Another critical aspect of service coordination is load balancing. As the volume of requests and users increases, certain microservices may become overloaded. To mitigate this, organizations must evenly distribute requests among multiple service instances. Dynamic load balancing technologies adapt to changing traffic patterns, adjusting their load distribution strategies accordingly.

Widely used tools implementing this technology include Kubernetes, NGINX, HAProxy, and Traefik.
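
The difference between a static and a dynamic strategy can be sketched in a few lines. Round robin ignores current load, while least connections adapts to it by tracking in-flight requests. Both classes are illustrative only; proxies such as NGINX and HAProxy offer these strategies (and others) as configuration options rather than code you write yourself.

```python
import itertools


class RoundRobinBalancer:
    """Static strategy: hands out instances in a fixed rotation."""

    def __init__(self, instances: list[str]):
        self._cycle = itertools.cycle(instances)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Dynamic strategy: routes each request to the instance with the fewest in-flight requests."""

    def __init__(self, instances: list[str]):
        self.active = {inst: 0 for inst in instances}

    def acquire(self) -> str:
        # min() returns the first instance with the lowest count, so ties follow list order.
        inst = min(self.active, key=self.active.get)
        self.active[inst] += 1
        return inst

    def release(self, inst: str) -> None:
        # Call when the request finishes so the counts reflect current load.
        self.active[inst] -= 1


if __name__ == "__main__":
    rr = RoundRobinBalancer(["orders-1", "orders-2", "orders-3"])
    print([rr.pick() for _ in range(4)])  # ['orders-1', 'orders-2', 'orders-3', 'orders-1']

    lc = LeastConnectionsBalancer(["orders-1", "orders-2"])
    slow = lc.acquire()  # orders-1 starts a long-running request
    fast = lc.acquire()  # orders-2 starts a short one
    lc.release(fast)     # the short request finishes
    print(lc.acquire())  # orders-2, because orders-1 is still busy
```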

- Inter-service communication

Communication between microservices plays a pivotal role in scalability: services must exchange data to deliver the full functionality of the application.

The common microservices communication patterns are:

- HTTP/REST API

Each microservice exposes an API over HTTP and receives requests from other services. Interaction follows REST principles: each resource has its own URL and supports HTTP methods (GET, POST, PUT, DELETE, etc.) for accessing and modifying data.

- Message brokers

Another method of inter-service communication uses message brokers as intermediaries for message exchange between microservices. When one microservice needs to pass information to another, it sends a message to the broker, which then delivers it to the recipient. This decouples sender and receiver: neither needs to know the other’s location, and the receiver does not have to be available at the moment the message is sent. Examples of message brokers include Apache Kafka and RabbitMQ.
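
The decoupling a broker provides can be illustrated with a toy, single-process broker. `InMemoryBroker` is a hypothetical class, not the Kafka or RabbitMQ client API; real brokers run as separate servers and add persistence, acknowledgments, partitioning, and delivery across the network.

```python
from collections import defaultdict, deque
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy broker: producers publish to named topics; queued messages wait for consumers."""

    def __init__(self) -> None:
        self._queues: dict[str, deque] = defaultdict(deque)
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # The producer only knows the topic name, not which services consume it.
        self._queues[topic].append(message)

    def deliver_pending(self) -> int:
        """Deliver queued messages to all subscribers of their topic; return how many were delivered."""
        delivered = 0
        for topic, queue in list(self._queues.items()):
            # Messages for topics without subscribers stay queued until someone subscribes.
            while queue and self._subscribers[topic]:
                message = queue.popleft()
                for handler in self._subscribers[topic]:
                    handler(message)
                delivered += 1
        return delivered


if __name__ == "__main__":
    broker = InMemoryBroker()
    broker.publish("order.created", {"order_id": 42})  # no consumer yet: the message is kept
    shipped = []
    broker.subscribe("order.created", shipped.append)  # e.g. a shipping service
    print(broker.deliver_pending(), shipped)  # 1 [{'order_id': 42}]
```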

- gRPC

gRPC is a modern, open-source Remote Procedure Call (RPC) framework originally developed by Google. It enables efficient, fast communication between microservices, using HTTP/2 for transport and, by default, Protocol Buffers for serializing messages.

Step 2. Data consistency

Ensuring data consistency is a complex task in a distributed environment where each microservice updates its data independently. In a traditional monolithic architecture, transactions are relatively easy to manage because the application usually works with a single database.

In a microservices architecture, however, each microservice may have its own database, which makes distributed transactions challenging. ACID (Atomicity, Consistency, Isolation, Durability) transactions that span several services, for example via two-phase commit, are a poor fit for microservices: they add coordination complexity, limit performance and flexibility, and increase database load as the system scales.

Below are common approaches that can help ensure data consistency when scaling microservices:

- Saga pattern

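Instead of one large distributed transaction, the saga pattern breaks an operation into a sequence of local transactions, one per microservice. Each local transaction updates its own service’s data and triggers the next step; if a step fails, the saga runs compensating transactions that undo the changes made by the steps that already completed.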
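
Below is a minimal sketch of an orchestration-based saga, in which one coordinator runs each step and, on failure, triggers compensating actions in reverse order. (Sagas can also be choreographed through events instead.) The step functions are hypothetical stand-ins for calls to separate services; a production saga would also persist its progress so it can resume after a crash.

```python
from dataclasses import dataclass
from typing import Callable

Step = Callable[[dict], None]


@dataclass
class SagaStep:
    name: str
    action: Step        # local transaction in one service
    compensation: Step  # semantically undoes `action` if a later step fails


def run_saga(steps: list[SagaStep], context: dict) -> bool:
    """Run the steps in order; on failure, compensate completed steps in reverse."""
    log = context.setdefault("log", [])
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action(context)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            for done in reversed(completed):
                done.compensation(context)
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
        log.append(f"{step.name} done")
    return True


# Hypothetical local transactions of an inventory service and a payment service.
def reserve_stock(ctx: dict) -> None:
    ctx["stock_reserved"] = True


def release_stock(ctx: dict) -> None:
    ctx["stock_reserved"] = False


def charge_card(ctx: dict) -> None:
    if ctx["amount"] > ctx["card_limit"]:
        raise RuntimeError("card declined")
    ctx["charged"] = True


def refund_card(ctx: dict) -> None:
    ctx["charged"] = False


if __name__ == "__main__":
    order = {"amount": 120, "card_limit": 100}
    steps = [SagaStep("reserve_stock", reserve_stock, release_stock),
             SagaStep("charge_card", charge_card, refund_card)]
    print(run_saga(steps, order))  # False
    print(order["log"])  # ['reserve_stock done', 'charge_card failed: card declined', 'compensated reserve_stock']
```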

- CQRS

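CQRS (Command Query Responsibility Segregation) separates write operations (commands) from read operations (queries) into distinct models. This allows reads and writes to be optimized and scaled independently and provides a more flexible data access model. The read model is often updated asynchronously, so it may briefly lag behind the write model.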
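
A minimal in-process sketch of CQRS follows, assuming an event list as the link between the two sides (a common but not mandatory choice). The class and event names are hypothetical. In practice the write and read models usually live in separate stores, and the read side is fed through a message broker.

```python
from dataclasses import dataclass, field


@dataclass
class OrderWriteModel:
    """Command side: validates changes, stores them, and records events."""
    orders: dict[int, dict] = field(default_factory=dict)
    events: list[tuple[str, dict]] = field(default_factory=list)

    def place_order(self, order_id: int, customer: str, amount: float) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.orders[order_id] = {"customer": customer, "amount": amount}
        self.events.append(("OrderPlaced", {"customer": customer, "amount": amount}))


@dataclass
class CustomerTotalsReadModel:
    """Query side: a denormalized view that answers one question quickly."""
    totals: dict[str, float] = field(default_factory=dict)
    applied: int = 0  # how many events from the write side have been applied

    def sync(self, events: list[tuple[str, dict]]) -> None:
        # Apply only events not seen yet; in a real system this would run asynchronously.
        for name, data in events[self.applied:]:
            if name == "OrderPlaced":
                customer = data["customer"]
                self.totals[customer] = self.totals.get(customer, 0.0) + data["amount"]
            self.applied += 1

    def total_spent(self, customer: str) -> float:
        return self.totals.get(customer, 0.0)


if __name__ == "__main__":
    write, read = OrderWriteModel(), CustomerTotalsReadModel()
    write.place_order(1, "alice", 30.0)
    write.place_order(2, "alice", 12.5)
    print(read.total_spent("alice"))  # 0.0: the read model has not caught up yet
    read.sync(write.events)
    print(read.total_spent("alice"))  # 42.5
```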

Step 3. Performance improvement

When scaling microservices and handling a large number of requests, performance issues may arise. Below, we’ll list a series of actions to help improve performance.

- Data caching

Data caching involves storing the results of frequent queries. When the same request is made again, the data is retrieved from the cache rather than the original source. Caching can significantly reduce the load on the database and decrease the response time of microservices.
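
One common way to apply caching is the cache-aside pattern with a time-to-live (TTL), sketched below. A plain in-process dictionary stands in for a shared cache such as Redis or Memcached (an assumption made for brevity); with many service instances, a shared cache avoids each instance keeping its own copy. The TTL bounds how stale a cached value can get.

```python
import time
from collections.abc import Hashable
from typing import Any, Callable


class TTLCache:
    """Cache-aside helper: return a fresh cached value, otherwise load it from the source."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[Hashable, tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # hit: the database is not touched
        value = loader()     # miss or stale entry: query the original source
        self._entries[key] = (now, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Call after a write so readers do not see stale data until the TTL runs out.
        self._entries.pop(key, None)


if __name__ == "__main__":
    fake_now = [0.0]
    queries = []
    cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])

    def load_product() -> dict:
        queries.append("product 7")  # stands in for a database query
        return {"id": 7, "price": 10}

    cache.get_or_load("product:7", load_product)
    cache.get_or_load("product:7", load_product)
    print(len(queries))  # 1: the second call was served from the cache
    fake_now[0] = 31.0
    cache.get_or_load("product:7", load_product)
    print(len(queries))  # 2: the entry expired after 30 seconds
```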

- Asynchronous processing

Asynchronous processing can improve the performance of microservices, especially when requests take a long time to process or depend on external services. Instead of blocking the calling service until the operation completes, the microservice can acknowledge the request immediately, perform the operation in the background, and deliver the result later.
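
The following asyncio sketch shows the idea: `submit()` returns a job ID immediately, and a background worker does the slow part. `JobService` is hypothetical, and an in-process `asyncio.Queue` stands in for the broker-backed work queue a real system would use so that jobs survive restarts and can be shared across instances. Callers would fetch the result later, for example by polling a status endpoint or receiving a callback or message.

```python
import asyncio
import itertools


class JobService:
    """Hypothetical service that accepts work immediately and processes it in the background."""

    def __init__(self) -> None:
        self.queue: asyncio.Queue = asyncio.Queue()
        self.results: dict[int, str] = {}
        self._ids = itertools.count(1)

    async def submit(self, payload: str) -> int:
        job_id = next(self._ids)
        self.results[job_id] = "pending"
        await self.queue.put((job_id, payload))
        return job_id  # the caller gets an ID right away instead of waiting for the result

    async def worker(self) -> None:
        while True:
            job_id, payload = await self.queue.get()
            await asyncio.sleep(0.01)  # stands in for slow work, e.g. calling an external service
            self.results[job_id] = f"processed {payload}"
            self.queue.task_done()


async def main() -> dict[int, str]:
    service = JobService()
    worker = asyncio.create_task(service.worker())
    job_id = await service.submit("monthly-report")
    print(service.results[job_id])  # pending: submit() did not wait for the work
    await service.queue.join()      # for the demo only: wait until the queue is drained
    worker.cancel()
    return service.results


if __name__ == "__main__":
    print(asyncio.run(main()))  # {1: 'processed monthly-report'}
```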

- Horizontal scaling

Horizontal scaling involves adding more instances of a microservice on different servers or containers. This improves fault tolerance and allows the load to be balanced across the instances.
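
Deciding how many instances to run is usually automated. The Kubernetes Horizontal Pod Autoscaler, for instance, documents the rule `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The function below is a simplified sketch of that rule; it keeps a tolerance band (0.1 by default in Kubernetes) and min/max bounds but ignores stabilization windows, pod readiness, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale the replica count by the current/target metric ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 instances averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
    # Load drops to 20%: ceil(4 * 0.333...) = 2 instances are enough.
    print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```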

- Vertical scaling

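Vertical scaling involves increasing the resources (such as CPU and memory) of a single server or container to achieve higher performance. However, it is bounded by the capacity of one machine and adds no redundancy, so it is generally less flexible than horizontal scaling.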

- Monitoring and optimization

Continuously monitoring microservices’ performance and analyzing the collected metrics helps identify performance bottlenecks. For this purpose, you can use specialized tools such as Helios, Prometheus, and New Relic.

Step 4. Choosing the right infrastructure

Selecting the appropriate infrastructure significantly influences the scalability of microservices, as it provides the essential resources and deployment tools. The right choice depends on your project’s requirements and the functionality of its microservices.

Consider the following aspects when choosing infrastructure:

- Containerization and orchestration

Container platforms such as Docker streamline the packaging and delivery of microservices along with their dependencies. Each microservice runs in an isolated environment, which keeps its behavior consistent across development, testing, and production. Container orchestration platforms such as Kubernetes simplify management, deployment, and scaling, and automatically recover failed containers.

- Cloud platforms

Public cloud platforms offer flexible and scalable infrastructure for hosting and managing microservices. You can rent a cloud server from platforms like Timeweb Cloud and deploy your project on it.

- Cost evaluation

Analyze the costs associated with different cloud platforms and services to optimize infrastructure expenses. This evaluation process is crucial for reducing deployment costs and subsequent scaling expenses.

- Long-term scalability

Ensure that the selected infrastructure can seamlessly expand and adapt to the growing requirements of your application in the future. This approach helps avoid unnecessary complexities as the application evolves. Choose infrastructure that supports project growth through features like automatic microservices scaling, monitoring, resource management, and load balancing.

Step 5. Monitoring and observability

Monitoring and observability play a crucial role in the successful operation of microservices architecture. They allow you to identify issues, track performance, analyze data, and take measures to optimize the system. Below are the main aspects and monitoring tools worth paying attention to.

- Request tracing

Request tracing allows you to follow the path of a request from the client through the microservices. This is especially useful in complex systems where a request may pass through multiple services before a response is returned. Request tracing helps identify performance bottlenecks, latency issues, and failures in individual services.
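
Tracing works by passing a trace context along with every call. The W3C Trace Context specification defines a `traceparent` header of the form `version-traceid-parentid-flags`. The functions below are a hand-rolled sketch of propagating it; in practice, instrumentation libraries such as OpenTelemetry handle this and export spans to a backend. Function names are illustrative.

```python
import secrets

VERSION = "00"  # the W3C Trace Context version this sketch understands


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace at the edge: random 16-byte trace ID and 8-byte span ID."""
    trace_id = secrets.token_hex(16)
    span_id = secrets.token_hex(8)
    return f"{VERSION}-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming: str) -> str:
    """Continue a trace downstream: keep the trace ID, issue a new parent (span) ID."""
    version, trace_id, _parent_id, flags = incoming.split("-")
    if version != VERSION or len(trace_id) != 32 or len(flags) != 2:
        raise ValueError(f"unsupported traceparent: {incoming!r}")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


def outgoing_headers(incoming_headers: dict[str, str]) -> dict[str, str]:
    """Headers a service attaches when calling the next service in the chain."""
    incoming = incoming_headers.get("traceparent")
    return {"traceparent": child_traceparent(incoming) if incoming else new_traceparent()}


if __name__ == "__main__":
    edge = outgoing_headers({})          # the gateway starts the trace
    downstream = outgoing_headers(edge)  # e.g. the orders service calls payments
    same_trace = edge["traceparent"].split("-")[1] == downstream["traceparent"].split("-")[1]
    print(same_trace)  # True
```

Because every hop keeps the same trace ID, a tracing backend can stitch the individual spans back into one request timeline.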

To facilitate system optimization and debugging, you can use tools like Helios, Datadog, or Jaeger to implement request tracing.

- Logging

Logging involves recording events, errors, and other useful information occurring in microservices. Well-structured logs facilitate analysis and issue detection in the system. ELK Stack and Fluentd are examples of logging tools.
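
Structured logs are easiest to analyze when every line is machine-readable. The sketch below uses Python’s standard `logging` module to emit one JSON object per line, a format that log collectors such as Fluentd or Logstash can parse without custom patterns. The field names are assumptions rather than a required schema; including a trace ID ties log lines to request traces.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line that log shippers can parse."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
            # Set per call with extra={"trace_id": ...} to correlate logs with traces.
            "trace_id": getattr(record, "trace_id", None),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logger(service: str, stream=None) -> logging.Logger:
    logger = logging.getLogger(service)
    handler = logging.StreamHandler(stream)  # stream=None means sys.stderr
    handler.setFormatter(JsonFormatter(service))
    logger.handlers = [handler]  # replace, so repeated calls do not duplicate output
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = configure_logger("orders")
    log.info("order %s created", 42, extra={"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"})
```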

- Metric monitoring

Metric monitoring allows you to collect and analyze performance metrics, resource utilization, and other statistical information about microservices. Examples of tools for collecting and visualizing metrics include Prometheus and Grafana.
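
Prometheus collects metrics by scraping an HTTP endpoint that returns them in a plain-text exposition format. The hypothetical `Counter` class below shows what a labeled counter looks like in that format; real services would use an official client library such as `prometheus_client`, which also handles label escaping, histograms, and the HTTP endpoint itself.

```python
from collections import defaultdict


class Counter:
    """Labeled, monotonically increasing counter rendered in Prometheus text format."""

    def __init__(self, name: str, help_text: str, label_names: tuple[str, ...]):
        self.name = name
        self.help_text = help_text
        self.label_names = label_names
        self._values: dict[tuple[str, ...], float] = defaultdict(float)

    def inc(self, *label_values: str, amount: float = 1.0) -> None:
        if amount < 0:
            raise ValueError("counters can only go up")
        if len(label_values) != len(self.label_names):
            raise ValueError(f"expected labels {self.label_names}")
        self._values[label_values] += amount

    def render(self) -> str:
        # Label values are not escaped here; keep them free of quotes and backslashes.
        lines = [f"# HELP {self.name} {self.help_text}", f"# TYPE {self.name} counter"]
        for values, total in sorted(self._values.items()):
            labels = ",".join(f'{k}="{v}"' for k, v in zip(self.label_names, values))
            value = int(total) if total.is_integer() else total
            lines.append(f"{self.name}{{{labels}}} {value}")
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    requests = Counter("http_requests_total", "Total HTTP requests.", ("service", "status"))
    requests.inc("orders", "200")
    requests.inc("orders", "200")
    requests.inc("orders", "500")
    print(requests.render(), end="")
```

Running the demo prints a `# HELP` line, a `# TYPE` line, and one sample per label combination, such as `http_requests_total{service="orders",status="200"} 2`.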

- Event management and alerting

Event management and alerting are used to respond automatically to specific events or states in the system. They help detect errors, failures, and other issues that may affect the application’s performance and promptly notify the responsible team. For example, you can use Prometheus Alertmanager or PagerDuty.
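
A common refinement is to require a condition to persist before alerting, so that short spikes do not page anyone. Prometheus alerting rules express this with a `for` duration, moving an alert from pending to firing. The sketch below re-creates only that state logic in plain Python and is not how Prometheus is implemented; routing a firing alert to a channel such as PagerDuty would be Alertmanager’s job and is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertRule:
    """Fire only if `value > threshold` holds for at least `for_seconds`."""
    name: str
    threshold: float
    for_seconds: float
    state: str = "inactive"               # inactive -> pending -> firing
    pending_since: Optional[float] = None

    def evaluate(self, value: float, now: float) -> str:
        if value <= self.threshold:
            self.state, self.pending_since = "inactive", None  # condition cleared: reset
        elif self.pending_since is None:
            self.state, self.pending_since = "pending", now    # condition just started
        if self.pending_since is not None and now - self.pending_since >= self.for_seconds:
            self.state = "firing"                               # sustained long enough: notify
        return self.state


if __name__ == "__main__":
    rule = AlertRule("HighErrorRate", threshold=0.05, for_seconds=120)
    # Error rate sampled every 60 seconds.
    for t, error_rate in [(0, 0.08), (60, 0.09), (120, 0.07), (180, 0.01)]:
        print(t, rule.evaluate(error_rate, now=t))
    # 0 pending / 60 pending / 120 firing / 180 inactive
```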

Step 6. Microservices resilience

In microservices architecture, where each service operates independently of others, it’s important to implement mechanisms that ensure stable and reliable system operation even in case of failures and errors in individual services. This is especially relevant when it comes to microservices scaling, as the process becomes more complicated with a significant increase in the number of services.

Below are some aspects and tools that will help you establish microservices resilience.

- Fault tolerance

Fault tolerance in microservices architecture refers to the system’s ability to continue functioning reliably in the event of failures or errors. This approach aims to minimize the impact of individual faulty components on the overall system operation by using appropriate deployment strategies, load balancing, and fault handling.
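
One widely used fault-tolerance technique is the circuit breaker: after repeated failures, calls to a dependency fail fast for a cool-down period instead of piling up, and a trial call later checks whether it has recovered. The class below is a minimal, single-threaded sketch; libraries such as Resilience4j provide production-grade versions with thread safety and limits on trial calls in the half-open state.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down over: let a trial call through
        return "open"

    def call(self, func: Callable[[], T]) -> T:
        state = self.state
        if state == "open":
            raise CircuitOpenError("dependency marked unavailable; failing fast")
        try:
            result = func()
        except Exception:
            self.failures += 1
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures, self.opened_at = 0, None  # success closes the circuit
        return result
```

Wrapping a remote call would look like `breaker.call(lambda: fetch_payment_status(order_id))`, where `fetch_payment_status` is a hypothetical client function. While the circuit is open, callers get an immediate `CircuitOpenError` they can turn into a fallback response.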

- Fault detection and recovery

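Fault detection and recovery mechanisms detect failures and errors in the system in a timely manner and restore the application’s functionality. Kubernetes, for example, uses liveness probes to detect and restart unhealthy containers and readiness probes to stop routing traffic to instances that are not ready to serve requests.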

- Retry mechanisms

Retry mechanisms repeat a request to a service if it fails. This reduces the impact of temporary failures or overloads and improves the likelihood that the operation completes successfully. Retries are safest for idempotent operations and should use a backoff delay so they do not add load to a service that is already struggling. Libraries such as Spring Retry implement these mechanisms.
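
A minimal sketch of retries with capped exponential backoff and full jitter (a random delay between zero and the current backoff cap) follows. The `retry` helper and its defaults are assumptions for illustration, not Spring Retry’s API; which exceptions count as transient is a decision each service has to make.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry(func: Callable[[], T], attempts: int = 4, base_delay: float = 0.1,
          max_delay: float = 2.0,
          retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `func`, retrying transient errors with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error to the caller
            # The backoff cap doubles each time (0.1, 0.2, 0.4, ... up to max_delay);
            # a random delay below the cap keeps many clients from retrying in lockstep.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Errors outside `retry_on`, such as a validation error, propagate immediately, since retrying them would only repeat the same failure.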

Step 7. Security and access control

As an application grows and the number of its microservices increases, its attack surface expands. Dedicated security mechanisms can significantly strengthen the project as it scales. Here are some examples:

- Authentication and authorization

Implement reliable authentication and authorization mechanisms, for example, the OAuth 2.0 and OpenID Connect protocols, with JSON Web Tokens (JWTs) as access tokens.

- Data protection

Data protection includes mechanisms that ensure the confidentiality, integrity, and availability of data in microservices architecture. These include:

- TLS/SSL: TLS (Transport Layer Security) encrypts data in transit, both between clients and services and between the services themselves. Its predecessor, SSL (Secure Sockets Layer), is deprecated and should no longer be used.

- API gateway: a single entry point that can authenticate and authorize clients before requests reach the microservices, control access to individual API endpoints, and handle encryption, for example by terminating TLS.

- Security audits and testing

Conducting security audits and penetration testing using tools like Metasploit, OpenVAS, or Nessus can significantly enhance the security of your application.

Conclusion

In this article, we have outlined a comprehensive guide to scaling microservices, including potential challenges that developers may encounter and tools to address them. Proper scaling ensures the stable operation and high performance of the entire system.

Kanda Software has helped a wide range of partners and customers achieve their scalability goals. With the help of our experts, your company will be able to follow best practices in microservice scaling, keep users satisfied, and stay ahead of competitors.

Are you ready to tap into the limitless scalability potential? Talk to us today!